Cloud services are encouraging poor development practices

On the 28th of February Amazon suffered a brief outage which took several sites offline. This is occasionally to be expected, but it's the sites that rely on Amazon for background processes that give the most cause for concern.

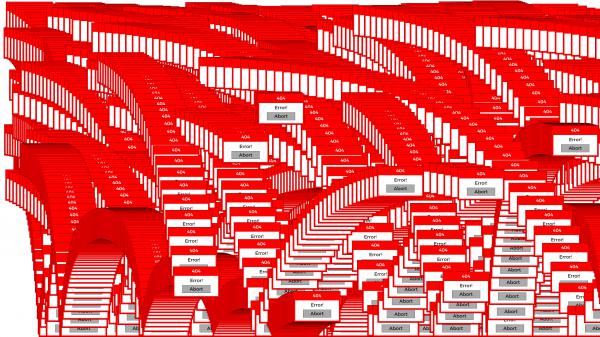

There has been... a problem

Developers are supposed to write a large chunk of code whose sole function is error detection and mitigation. If a customer enters something into a form field that the system does not allow, an appropriate warning tells them so. If you try to navigate to a page that doesn't exist, you'll get a typical 404 'page not found' page.

Internal processes are somewhat different. As developers we're exposed to the idea that certain errors don't need to be catered for. The only way a require_once call will fail is if the server has lost a disk, the file has been deleted, or the error was there from the start. Such an error on a live server will usually result in a blank page, and a well-configured web application firewall will detect the missing response or internal server error and generate an appropriate 'sorry, we know something is wrong' page.

Catering for the cloud

In recent years more code than ever has been pushed to the cloud. A large number of the websites you visit don't store their own images or other assets; these are stored on Amazon's S3 cloud instead. Remote asset hosting of this kind, often used as or alongside a CDN (content delivery network), has many benefits and is offered by many other providers. Google Hosted Libraries is a great resource that lets developers link directly to common libraries and frameworks, such as jQuery.

The problem with all of these resources is that developers treat them as though they were part of their own server. Once a piece of code has been tested and found to work, no further consideration is given to errors that may occur later, and this is a problem.

No errors

During the recent S3 outage I was trying to upload images on a website, but nothing was happening. An animated loading GIF just sat there and spun, and there were no errors at all. A typical user, including me, would have had no idea what was wrong. Was the image too large? Was it too small, or the wrong ratio? Was it in a format that the site didn't like for some reason? If this had been my first visit to this website I'd have been well within my rights to assume that the site was just poorly made and broken, and to leave and never return. This is definitely not the message you want to send to your client's users.

Too many errors

There was a time a few years ago when Google Hosted Libraries fell over, and took a large chunk of the internet with it. It seems that few developers cater for remote libraries that fail to load, and most have no fallback. That includes those nasty websites which are 100% JavaScript generated, like Wix (which also relies on S3). As a result, functionality fails without warning and whole sites (such as Wix-based ones) return a blank page.
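A fallback for a remote library does not need to be complicated. The sketch below tries a list of script URLs in turn and only gives up when all of them have failed. The function name, the handler names, and the local `/js/jquery.min.js` path are assumptions for illustration; the `document` is passed in as a parameter purely so the function can be exercised outside a browser.

```javascript
// Try each script URL in turn, moving to the next one when a source fails.
// All names here are hypothetical.
function loadScriptWithFallback(doc, urls, handlers) {
  const [url, ...remaining] = urls;
  if (url === undefined) {
    // Every source failed: tell the user rather than showing a blank page.
    handlers.onAllFailed();
    return;
  }
  const script = doc.createElement("script");
  script.src = url;
  script.onload = () => handlers.onLoad(url);
  script.onerror = () => loadScriptWithFallback(doc, remaining, handlers);
  doc.head.appendChild(script);
}

// Browser-only usage: remote copy first, then a copy on your own server.
if (typeof document !== "undefined") {
  loadScriptWithFallback(
    document,
    [
      "https://ajax.googleapis.com/ajax/libs/jquery/3.7.1/jquery.min.js",
      "/js/jquery.min.js", // assumed path to a locally hosted copy
    ],
    {
      onLoad: (url) => console.log("Library loaded from " + url),
      onAllFailed: () => {
        document.body.insertAdjacentHTML(
          "beforeend",
          "<p>Some features are unavailable right now.</p>"
        );
      },
    }
  );
}
```

Scripts inserted this way load asynchronously, so anything that depends on the library should start from `onLoad` rather than assume the library is already there. The error handler also only fires once the browser has given up on the request, which can take a while if the remote host hangs instead of refusing the connection.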

The load order of JavaScript is incredibly important too, because errors in other scripts can affect your own. For example, suppose you carry advertising and its code is loaded before yours. If that code relies on S3 or Google during an outage and has no error checking for that scenario, it can hold up or break whatever comes after it: a script that blocks while waiting on a dead host delays everything below it, and a script that fails before defining something later code depends on takes that code down with it. This is such an important point to remember: ignore all third-party requests to put their code in your page's head, above your own code. Your code must always come first, and it should also sit just before your closing body tag, not in the head. Content should be more important than enhancements...
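Within your own code, one way to stop a broken third-party script from taking everything else down is to run each optional enhancement in isolation. This is only a sketch of that idea; `runEnhancements`, the enhancement names, and `thirdPartyAds` are all hypothetical.

```javascript
// Run each optional enhancement in its own try/catch so that one failure
// (for example, a third-party library that never loaded) cannot stop the rest.
function runEnhancements(enhancements, reportError) {
  const completed = [];
  for (const { name, run } of enhancements) {
    try {
      run();
      completed.push(name);
    } catch (error) {
      reportError(name, error);
    }
  }
  return completed;
}

const completed = runEnhancements(
  [
    // `thirdPartyAds` stands in for a remote library that failed to load,
    // so calling it throws a ReferenceError, which is caught above.
    { name: "adverts", run: () => thirdPartyAds.init() },
    { name: "gallery", run: () => { /* your own code, unaffected */ } },
  ],
  (name, error) => console.warn("Enhancement '" + name + "' failed: " + error.message)
);

console.log(completed); // ["gallery"]
```

The content of the page never depends on any of these calls succeeding; each one is an enhancement that is allowed to fail.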

Progressive enhancement

With modern browsers, frameworks, and 'web apps' came a loss of experience in good code design techniques. Progressive enhancement was a strong movement in the 2000s which seems to be decidedly lacking now, even though it is more important than ever. If your code relies on third-party hosted code with no fallbacks then you will suffer the consequences. Even if you think that it's safe for your site to require JavaScript at a minimum (it isn't), you should never treat remote sources as safe and always available.